How to Monitor MySQL Using OpenTelemetry

MySQL is a widely used open-source database management system that is the backbone for many desktop, mobile, web, and cloud applications. It’s best known for being the ‘M’ in the still-prevalent LAMP stack (Linux, Apache, MySQL, PHP) and is often used as a supporting database for various web applications such as E-commerce, CMS, CRM, and forums.

As we move forward into 2024, it’s important to reassess your monitoring strategy. Your strategy should be designed to adapt to the changing demands of distributed hybrid and multi-cloud architectures. It should be flexible and comprehensive enough to monitor the rest of your stack.

The Benefits of Monitoring MySQL

There are several key benefits to be aware of as you weigh how much effort you want to dedicate to implementing a solution. Here are some of the key benefits:

- Provides the context to optimize database performance by monitoring resource contention and query performance

- Exposes user trends to pinpoint friction points in your attached application

- Surfaces critical vulnerabilities by capturing anomalous usage patterns

- Enables rapid root-cause analysis and resolution through the collection and layering of metrics of log data

- Provides an audit trail for database changes and user activity, provides the meat impact analysis

Related Content: How to Monitor SQL Server with OpenTelemetry

What Signals Matter?

It’s crucial to capture the 4 golden signals to ensure uptime for your database and attached application. In the MySQL context, this means capturing a representative set of the following signals:

- Query Performance

- Query response time/latency

- Slow/Long running queries

- Query Errors

- Throughput:

- Queries per second

- Transactions per second

- Resource Utilization:

- CPU Usage

- Memory Usage

- Disk I/O

- Network I/O

- Connections:

- Max Connections

- Active Connections/Threads

- Running Connections/Threads

- Failed Connections

- Replication:

- Replication latency

- Replication errors

- Database Growth

- Database growth rate

- InnoDB Row Operations

- Storage Engine (for InnoDB):

- Buffer Pool Usage

- InnoDB Row Operations

- Database Errors

- Configuration and operational errors

The Case for Monitoring MySQL with OpenTelemetry

Though there are many solutions teams can implement, such as the built-in MySQL Enterprise Monitor for example, OpenTelemetry provides the means to collect signals in parity with existing solutions but pulls ahead as the best long-term solution for those mulling over a fresh monitoring and observability strategy for MySQL and the rest of their stack.

The project's primary goals of data ownership and vendor-neutrality alone make it worth considering, but the maturity and comprehensive toolset of offers make it a clear leader. Any effort spent in OTel now will yield dividends later in the form of cost and time savings and flexibility as monitoring needs of your organization grow and change.

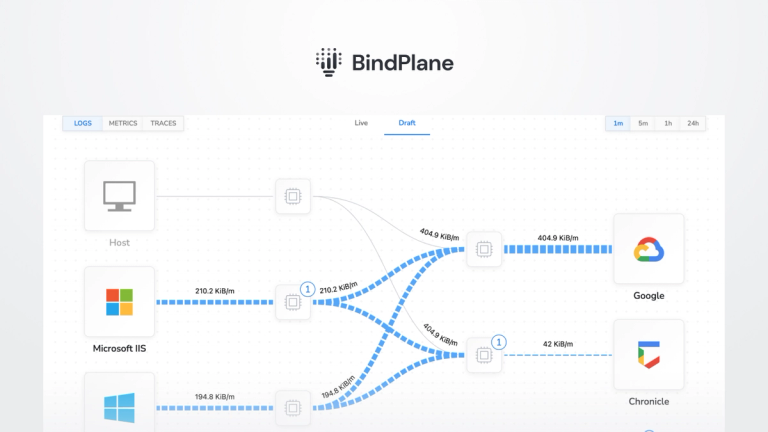

Core Components of the OpenTelemetry Collector

As a quick primer, the OpenTelemetry Collector has a few primary components that facilitate data collection, processing, and transmission of the above signals. Here’s a quick breakdown:

- OpenTelemetry Collector: a lightweight data collector that can be deployed as an on-host agent (this is how we’ll be using it to monitor MySQL) or as a gateway for other collectors, shipping data to one or many configured destinations. The collector has a few primary components:

- Receivers: collect telemetry from a specific application or system (like MySQL) or another OpenTelemetry collector via OTLP.

- Processors: transform the data by providing the levers to enrich, filter, mask (PII), and other data refinement techniques. Advanced users can utilize OTTL to do really interesting transformations.

- Exporters: transmit telemetry to another destination: another OpenTelemetry collector, to file, to an observability/SIEM backend

Each component can be logically connected as a pipeline, the collector’s configuration file.

- mysqlreceiver collects MySQL database/instance metrics

- hostmetricsreceiver collects operating system and specific process metrics

- filelogreceiver captures logs from the specified file path(s). These events can be processed and turned into structured log data like JSON.

Related Content: OpenTelemetry in Production: A Primer

Implementation: Monitoring MySQL with OpenTelemetry

Prerequisites

Before starting, there’s a few things you’ll need:

- MySQL instance (5.7+) running on a Linux or Windows VM with admin privileges.

- To collect MySQL logs (optionally), you must enable the 3 log types (error, general query, slow query) in your configuration file. Here are the steps to do so:

- Have a backend ready to go as a destination for your MySQL monitoring data. For this example, I’m using Google Cloud Operations. If you choose Google Cloud Operations, you’ll need you'll need:

- Service account (and corresponding JSON key) in your project with the following roles:

- Logs Writer

- Monitoring Admin

- Set the full path to your JSON key file in the GOOGLE_APPLICATION_CREDENTIALS environment variable using the methods mentioned above on your MySQL host

- Other means of authenticating are available depending on your setup.

- Service account (and corresponding JSON key) in your project with the following roles:

Installing the OpenTelemetry Collector

For this example, we’ll use the contrib distribution of the OpenTelemetry collector. Generally, we recommend ‘contrib’ as it provides all the necessary components to kick the tires on the bleeding-edge components the project offers. We'll be installing the OTel Collector on the same host as our MySQL instance.

Linux:

- Follow the steps for Linux here. When running commands, replace 'otelcol' with 'otelcol-contrib' as the otelcol version does not include the MySQL receiver or Google Cloud exporter. Here’s an example for Debian:

1sudo apt-get update

2sudo apt-get -y install wget systemctl

3wget https://github.com/open-telemetry/opentelemetry-collector-releases/releases/download/v0.98.0/otelcol-contrib_0.98.0_linux_amd64.deb

4sudo dpkg -i otelcol-contrib_0.98.0_linux_amd64.debWindows:

- Download the most recent 'otelcol-contrib' tarball for Windows from the releases linked in the getting started docs.

- Extract the tarball to a directory where you'd like the executable to run. I’d recommend downloading 7-zip, or you can use the Windows tar PowerShell command:

1tar -xvzf .\otelcol-contrib_0.99.0_windows_amd64.tar.gz- Create an empty file in the collector's root directory called config.yaml. This will be used as the collector's configuration file. (This is a required step for Windows, but not Linux deployments)

Configuring the OpenTelemetry Collector

Next, you can open the config.yaml for your collector and begin adding and configuring the abovementioned components. Note, for Linux, edit and overwrite the configuration file that was automatically created:

1vim ./etc/otelcol-contrib/config.yamlMySQL Receiver

- Add the mysqlreceiver to the receivers section.

- Set MYSQL_USERNAME and MYSQL_PASSWORD environment variables.

Modify config.yaml to add this receiver configuration (see steps below)

1receivers:

2 mysql:

3 endpoint: localhost:3306

4 username: $MYSQL_USERNAME

5 password: $MYSQL_PASSWORD

6 collection_interval: 60sYou may have to change the default endpoint to match your environment.

Host Metrics Receiver

- Add the hostmetrics receiver to your collector configuration.

- Call out the specific metrics the host receiver should gather or use the defaults provided in the example.

1receivers:

2 hostmetrics:

3 scrapers:

4 filesystem:

5 metrics:

6 system.filesystem.utilization:

7 enabled: true

8 memory:

9 metrics:

10 system.memory.utilization:

11 enabled: true

12 network:

13 metrics:

14 system.network.conntrack.count:

15 enabled: true

16 system.network.conntrack.max:

17 enabled: true

18 paging:

19 metrics:

20 system.paging.utilization:

21 enabled: trueFile Log Receiver

- Add filelog receiver to your collector configuration.

- Configure the receiver to point at the log files you enabled on your MySQL instance.

1receivers:

2 filelog:

3 include:

4 - /var/log/mysql/mysql_error.log

5 - /var/log/mysql/mysql.log

6 - /var/log/mysql/mysql-slow.logGoogle Cloud Exporter

- Add the Google Cloud exporter to your collector configuration.

1exporters:

2 googlecloud:Assemble the Telemetry Pipeline

Now, create a pipeline in the service configuation, referencing the components we called out above. Make sure to call out the appropriate receiver in the 'metrics' or 'logs' pipeline, where applicable.

1service:

2 pipelines:

3 metrics:

4 receivers: [mysql,hostmetrics]

5 exporters: [googlecloud]

6 logs:

7 receivers: [filelog]

8 exporters: [googlecloud]Set Environment Variables and Modify Service File

Lastly, set the credentials as an environment variable, matching the variable specified in the configuration file.

Linux:

- Edit the service to add environment variables:

1systemctl edit --full otelcol-contrib.service1[Service]

2Environment=MYSQL_USERNAME=(your mysql username)

3Environment=MYSQL_PASSWORD=(your mysql password)Windows:

- Use the setx command to set environment variables:

1setx MYSQL_USERNAME "(value)" /m

2setx MYSQL_PASSWORD "(value)" /mRunning the OpenTelemetry Collector

After adding each receiver/exporter and constructing your pipeline, in your config you should look like this:

1receivers:

2 mysql:

3 endpoint: localhost:3306

4 username: $MYSQL_USERNAME

5 password: $MYSQL_PASSWORD

6 collection_interval: 60s

7

8 hostmetrics:

9 scrapers:

10 filesystem:

11 metrics:

12 system.filesystem.utilization:

13 enabled: true

14 memory:

15 metrics:

16 system.memory.utilization:

17 enabled: true

18 network:

19 metrics:

20 system.network.conntrack.count:

21 enabled: true

22 system.network.conntrack.max:

23 enabled: true

24 paging:

25 metrics:

26 system.paging.utilization:

27 enabled: true

28

29 filelog:

30 include:

31 - /var/log/mysql/mysql_error.log

32 - /var/log/mysql/mysql.log

33 - /var/log/mysql/mysql-slow.log

34

35exporters:

36 googlecloud:

37

38service:

39 pipelines:

40 metrics:

41 receivers: [mysql,hostmetrics]

42 exporters: [googlecloud]

43 logs:

44 receivers: [filelog]

45 exporters: [googlecloud]Just so you know, you might configure your receivers, exporters, and any processors differently depending on your environment and monitoring needs.

You can always configure more exporters if you'd like to send telemetry to multiple destinations.

Linux

- Run the collector by restarting the service:

1systemctl restart otelcol-contrib- You can check the health of the service with:

1systemctl status otelcol-contrib- You can check the collector log output with:

1journalctl -u otelcol-contribWindows

1.\otelcol-contrib.exe --config .\config.yamlAdding a processor

- You can differentiate multiple MySQL hosts by including the hostname gathered by the Resource Detection Processor:

1receivers:

2 mysql:

3 endpoint: localhost:3306

4 username: $MYSQL_USERNAME

5 password: $MYSQL_PASSWORD

6 collection_interval: 60s

7

8 hostmetrics:

9 scrapers:

10 filesystem:

11 metrics:

12 system.filesystem.utilization:

13 enabled: true

14 memory:

15 metrics:

16 system.memory.utilization:

17 enabled: true

18 network:

19 metrics:

20 system.network.conntrack.count:

21 enabled: true

22 system.network.conntrack.max:

23 enabled: true

24 paging:

25 metrics:

26 system.paging.utilization:

27 enabled: true

28

29 filelog:

30 include:

31 - /var/log/mysql/mysql_error.log

32 - /var/log/mysql/mysql.log

33 - /var/log/mysql/mysql-slow.log

34

35processors:

36 resourcedetection:

37 detectors: ["system"]

38 system:

39 hostname_sources: ["os"]

40

41exporters:

42 googlecloud:

43

44service:

45 pipelines:

46 metrics:

47 receivers: [mysql,hostmetrics]

48 processors: [resourcedetection]

49 exporters: [googlecloud]

50 logs:

51 receivers: [filelog]

52 processors: [resourcedetection]

53 exporters: [googlecloud]Viewing MySQL OpenTelemetry Data

If you follow the detailed steps above, the following MySQL metrics (and logs) will be available in your Google Cloud Operations Metrics and Logs Explorer. Some may only be collected if your MySQL instance's corresponding functionality is active.

Check out the MySQL receiver readme and documentation for more configuration and metric options.

Follow this space to keep up with our future posts and simplified configurations for various sources. For questions, requests, and suggestions, contact our support team at info@observiq.com.