observIQ

Your Unified Telemetry Platform

Reduce your observability costs by 40% or more, route your telemetry from any source to any destination, and manage your entire fleet of agents.

Introducing BindPlane OP

BindPlane OP addresses the most common problems enterprises face when analyzing, reducing, and managing their telemetry data.

- Reduce log and metric volume

- Route data to the right destination

- Edit, mask & encrypt sensitive data

A Complete Observability Pipeline

BindPlane OP transforms and simplifies telemetry operations, so modern observability teams work more efficiently and with less effort.

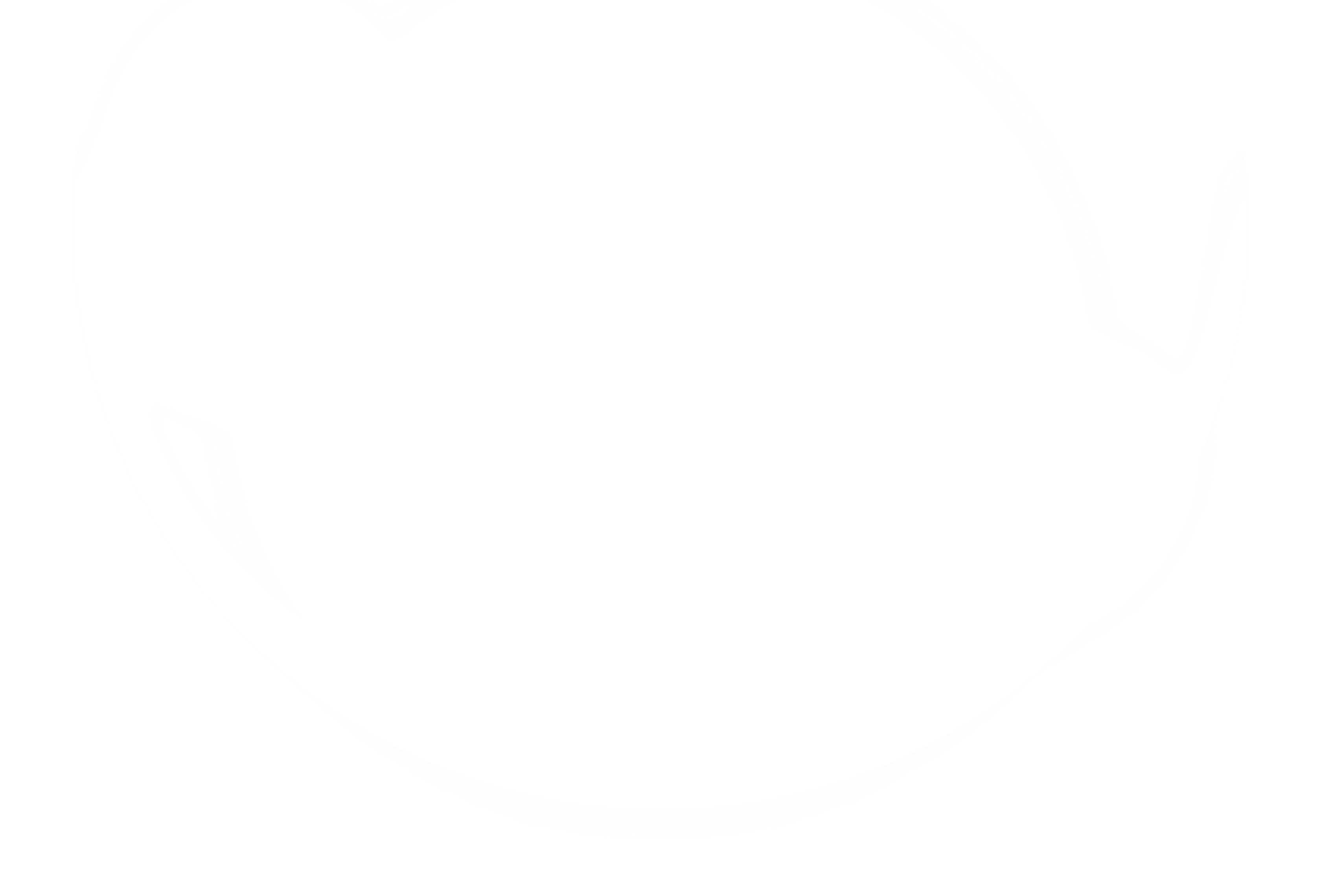

Reduce

Trim log volume via filtering, route compliance data to affordable storage, and analyze only high-value logs.

Standardize

Standardize your observability on OpenTelemetry, an open source standard, and avoid vendor lock-in.

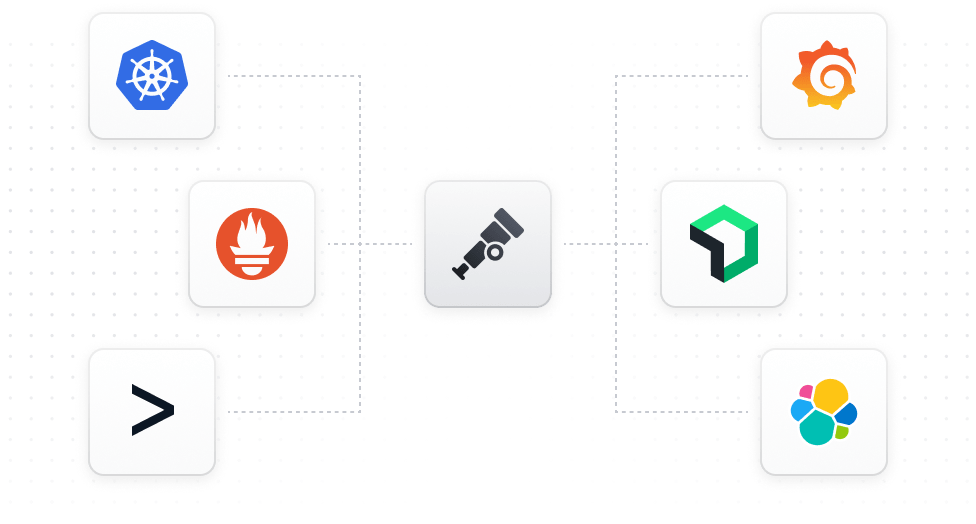

Enterprise Grade

Designed for complex environments with high availability, RBAC, SSO, 24/7 support, and enterprise scalability.

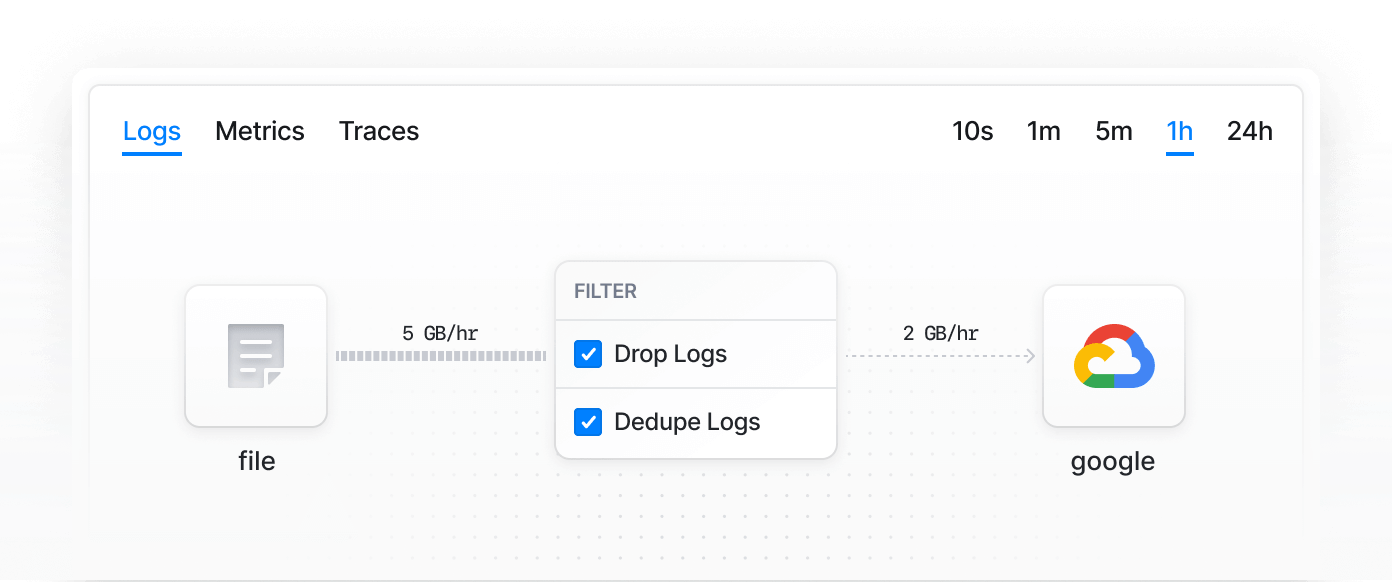

Simplify

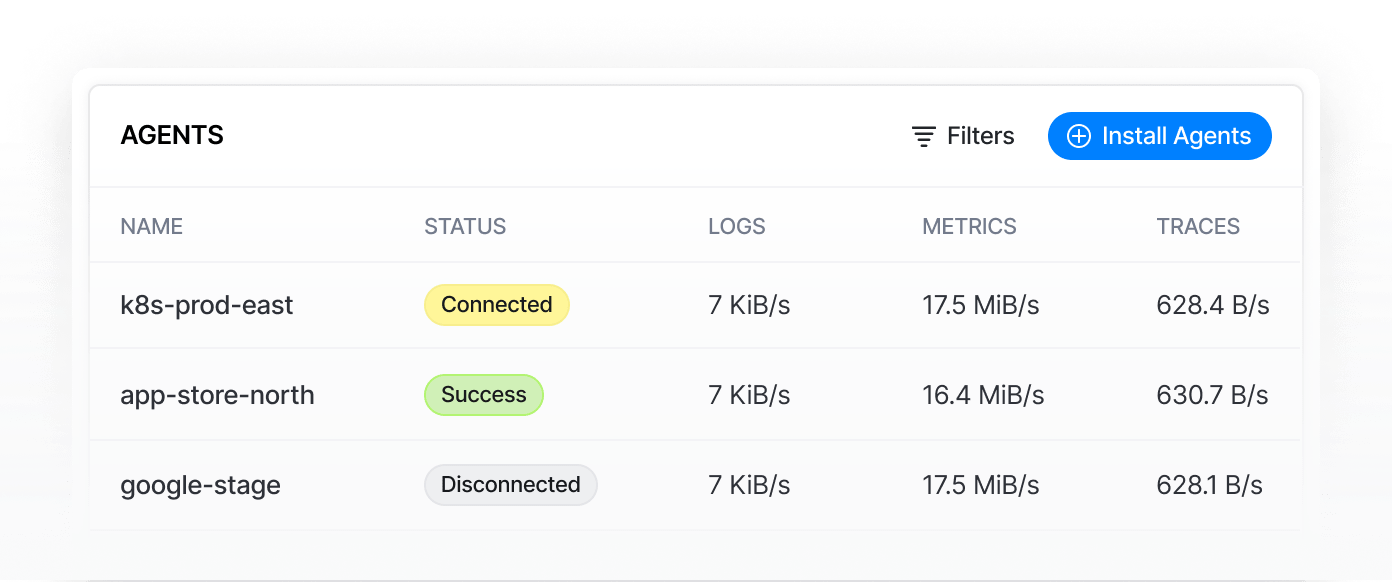

With BindPlane, the era of multiple agents ends. Now, gather metrics, logs, and traces using just one agent.

Customizable Solutions for Your Needs

Get flexible solutions that fit your environment and give you full control of your observability data.

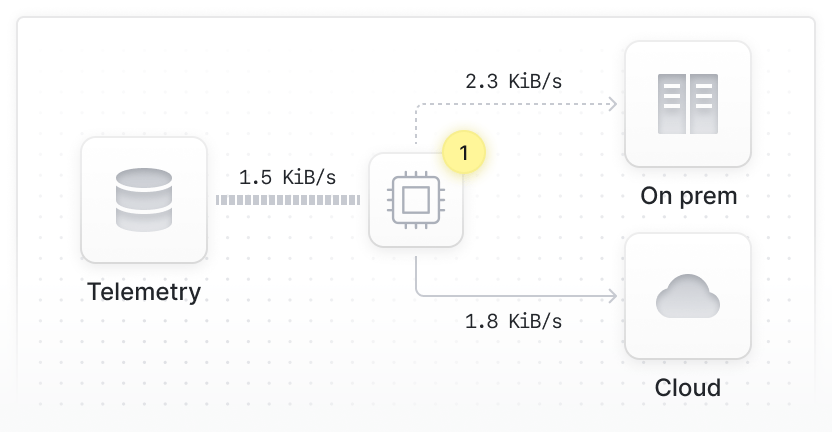

1

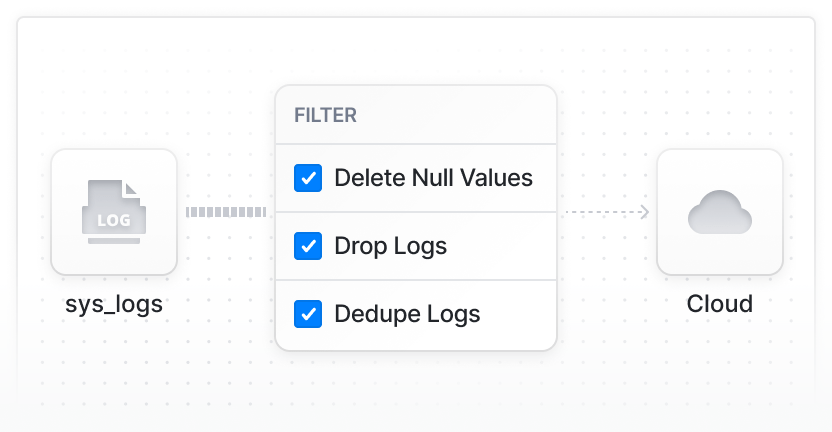

Reduce log volumes

Tame overwhelming log volume with BindPlane. It filters out unnecessary log data, which eases resource management and improves system performance.
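To make the idea of filtering concrete, here is a minimal sketch of severity-based log filtering in plain Python. It is an illustration only, not BindPlane's implementation or configuration: the `SEVERITY_RANK` table, the `filter_logs` function, and the record layout (`severity`, `body`) are all hypothetical names chosen for this example.

```python
from typing import Iterable, Iterator

# Hypothetical severity ranking for this sketch; not a BindPlane setting.
SEVERITY_RANK = {"DEBUG": 0, "INFO": 1, "WARN": 2, "ERROR": 3}


def filter_logs(records: Iterable[dict], min_severity: str = "WARN") -> Iterator[dict]:
    """Yield only the records at or above min_severity."""
    threshold = SEVERITY_RANK[min_severity]
    for record in records:
        # Records with a missing or unknown severity are kept,
        # so nothing is dropped by accident.
        rank = SEVERITY_RANK.get(record.get("severity", ""), threshold)
        if rank >= threshold:
            yield record


logs = [
    {"severity": "DEBUG", "body": "cache hit"},
    {"severity": "INFO", "body": "request served"},
    {"severity": "ERROR", "body": "upstream timeout"},
]
kept = list(filter_logs(logs))
```

In this sample, only the `ERROR` record survives the default `WARN` threshold. Dropping low-severity records at the source is one common way to cut volume before data is sent on.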

2

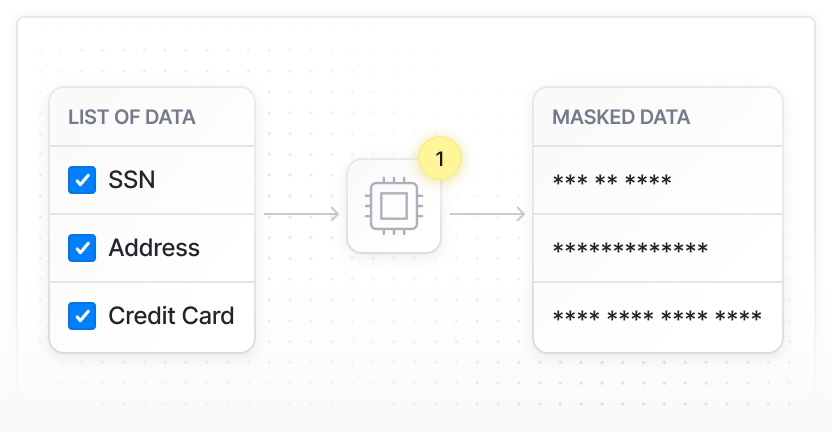

Redact and mask sensitive data

Remove or encrypt sensitive data before it leaves your servers, making compliance and security simple.
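As a rough illustration of masking, the sketch below replaces email addresses and US Social Security numbers in a log line with placeholders before the line would be forwarded. It is an assumption-laden example in plain Python, not BindPlane's own processor: the patterns, the placeholder strings, and the `mask` function are hypothetical, and real deployments usually need broader patterns.

```python
import re

# Hypothetical patterns for this sketch; production rules are usually broader.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def mask(text: str) -> str:
    """Replace emails and SSN-shaped numbers with fixed placeholders."""
    text = EMAIL.sub("[REDACTED_EMAIL]", text)
    text = SSN.sub("[REDACTED_SSN]", text)
    return text


masked = mask("user alice@example.com ssn 123-45-6789")
```

Here `masked` no longer contains the address or the number, while the rest of the line is left intact for analysis.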

3

Streamline cloud migration

Move to the cloud smoothly with BindPlane, reducing downtime and ensuring data continuity throughout the migration.