Deciphering Complex Logs With Regex Using BindPlane OP and OpenTelemetry

Preface

Parsing logs with regex is a valuable technique for extracting essential information from large volumes of log data. By employing this method, one can effectively identify patterns, errors, and other key insights, ultimately streamlining log analysis and enhancing system performance.

Prerequisites

- BindPlane OP & a BindPlane Agent (Custom OpenTelemetry Collector)

- A complex log file needing custom parsing

- Knowledge of Regex

- A selection of log samples that match all possible variations.

- (Optional) A good regex testing tool such as regex101.com

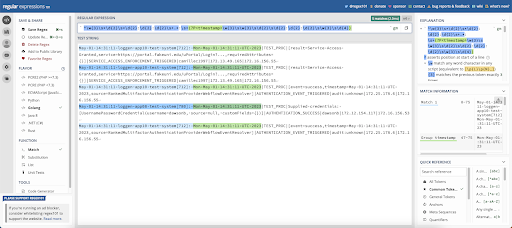

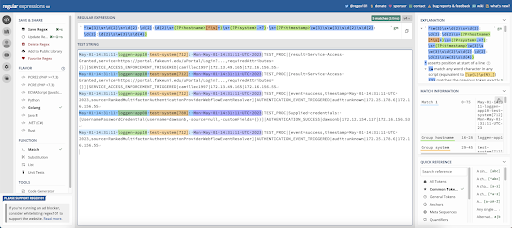

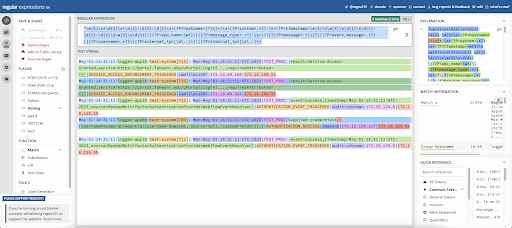

- Here is a link to regex101 for the examples from the blog: https://regex101.com/r/6hhy6K/4

Complex Log Data Samples

In this post, we’ll examine log entries that resemble the examples provided below. By utilizing a script to write these entries to a file with the current timestamps, we can effectively work with real-time data.

1May 01 14:31:11 loggen-app10 test-system[712]: Mon May 01 14:31:11 UTC 2023|TEST_PROC|[result=Service Access Granted,service=https://portal.fakeuni.edu/uPortal/Login?...,requiredAttributes={}]|SERVICE_ACCESS_ENFORCEMENT_TRIGGERED|camillec1997|172.13.49.165|172.16.156.55

2

3May 01 14:31:11 loggen-app10 test-system[712]: Mon May 01 14:31:11 UTC 2023|TEST_PROC|[result=Service Access Granted,service=https://portal.fakeuni.edu/uPortal/Login?...,requiredAttributes={}]|SERVICE_ACCESS_ENFORCEMENT_TRIGGERED|camillec1997|172.13.49.165|172.16.156.55

4

5May 01 14:31:11 loggen-app10 test-system[712]: Mon May 01 14:31:11 UTC 2023|TEST_PROC|[event=success,timestamp=May 01 14:31:11 UTC 2023,source=RankedMultifactorAuthenticationProviderWebflowEventResolver]|AUTHENTICATION_EVENT_TRIGGERED|audit:unknown|172.25.178.6|172.16.156.55

6

7May 01 14:31:11 loggen-app08 test-system[780]: Mon May 01 14:31:11 UTC 2023|TEST_PROC|Supplied credentials: [UsernamePasswordCredential(username=dawsonb, source=null, customFields={})]|AUTHENTICATION_SUCCESS|dawsonb|172.12.154.117|172.16.156.53

8

9May 01 14:31:11 loggen-app10 test-system[712]: Mon May 01 14:31:11 UTC 2023|TEST_PROC|[event=success,timestamp=May 01 14:31:11 UTC 2023,source=RankedMultifactorAuthenticationProviderWebflowEventResolver]|AUTHENTICATION_EVENT_TRIGGERED|audit:unknown|172.25.178.6|172.16.156.55Dissecting The Data

We can now take the first log entry above, and start dissecting it into sections that we wish to parse out.

First, we’ll notice that we have two timestamps:

1May 01 14:31:11 loggen-app10 test-system[712]: Mon May 01 14:31:11 UTC 2023|TEST_PROC|[result=Service Access Granted,service=https://portal.fakeuni.edu/uPortal/Login?...,requiredAttributes={}]|SERVICE_ACCESS_ENFORCEMENT_TRIGGERED|camillec1997|172.13.49.165|172.16.156.55The second timestamp is the one we will preserve to become our official timestamp, because it contains more information (timezone and year are useful, while the day of week isn’t really) that we can use to achieve the highest precision.

Breaking this down, we will write a non-capturing pattern to match the first timestamp.

^\w{3}\s\d{2}\s\d{2}:\d{2}:\d{2}\s+The caret “^” anchors to the start of the line. This is followed by “\w{3}”, which captures the 3 letter month abbreviation. After the month, is “\s\d{2}\s”, which is to capture a space; the 2 digit, 0 padded day of the month; and another space. Finally, we have “\d{2}:\d{2}:\d{2}\s+” – for 2 digit hour, 2 digit minute, 2 digit second, and 1 or more spaces.

For the second timestamp, we want a named capture group. This will become a named field in the JSON blob of parsed out fields.(?P<timestamp>\w{3}\s\w{3}\s\d{2}\s\d{2}:\d{2}:\d{2}\s\w{3}\s\d{4})

We’ve named this capture group “timestamp”. It contains the same basic regex as the other timestamp, with the addition of “\w{3}\s” at the start to capture the abbreviated day of the week, and “\s\w{3}\s\d{4}” replacing the “\s+” at the end in order to capture the 3 character timezone, and the 4 digit year.

Going further into the log message, we will want to parse out the hostname and the system:

1May 01 14:31:11 loggen-app10 test-system[712]: Mon May 01 14:31:11 UTC 2023|TEST_PROC|[result=Service Access Granted,service=https://portal.fakeuni.edu/uPortal/Login?...,requiredAttributes={}]|SERVICE_ACCESS_ENFORCEMENT_TRIGGERED|camillec1997|172.13.49.165|172.16.156.55In this message, our hostname is loggen-app10, and our system is test-system[712]. I was not told what the [712] was when I received these logs. I made the assumption that it is the PID (process ID), but I chose not to parse it out separately for now. Parsing these fields is fairly simple, and we end up with: “(?P<hostname>[^\s]+)\s+(?P<system>.*?):\s+”. We have a pair of named capture groups, hostname and system.

The pattern for hostname is “[^\s]+”, which says capture any non-space character and capture as many of them as you can (greedy). This is followed by “\s+”, which captures at least one, but as many as possible (greedy again) space(s).

The capture group for system is even easier, because after the space(s) we capture everything up to a colon character. To do this, we use “.*?”. What that pattern says is, capture any character 0 or more times, but don’t be greedy.

After that, we have the colon character and another 1 or more spaces greedy. These aren’t captured, but are needed to pad out between this section and the timestamp section we wrote above.

This results in the following starting pattern:

^\w{3}\s*\d{2}\s*\d{2}:\d{2}:\d{2}\s+(?P<hostname>[^\s]+)\s+(?P<system>.*?):\s+(?P<timestamp>\w{3}\s\w{3}\s\d{2}\s\d{2}:\d{2}:\d{2}\s\w{3}\s\d{4})

I won’t go through the entire pattern creation process, but I continue to chunk it up as I did above. The resulting final pattern is:

^\w{3}\s*\d{2}\s*\d{2}:\d{2}:\d{2}\s+(?P<hostname>[^\s]+)\s+(?P<system>.*?):\s+(?P<timestamp>\w{3}\s\w{3}\s\d{2}\s\d{2}:\d{2}:\d{2}\s\w{3}\s\d{4})\|(?P<app_name>\w*)\|((?P<message_type>.*?):\s+)?\[?(?P<message>.*)\]?\|(?P<event_message>.*?)\|(?P<username>.*?)\|(?P<external_ip>[\d\.:]*)\|(?P<internal_ip>[\d\.:]*)

This final pattern includes the following named capture groups, which become fields in our JSON blob of parsed data:

- hostname

- system

- timestamp

- app_name

- message_type

- message

- event_message

- username

- external_ip

- internal_ip

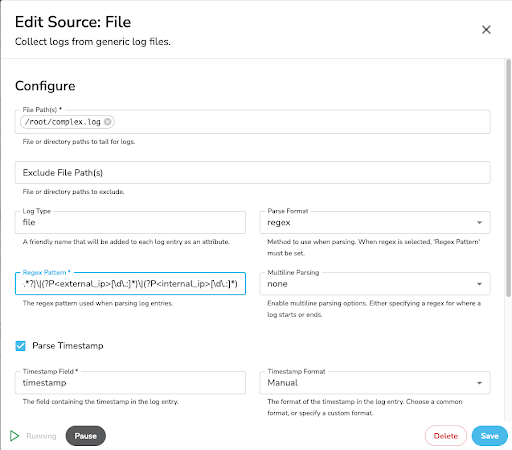

Implementing The Regex

In BindPlane, I create a File source. This looks at my generated log file in /root/complex.log. I’ve selected regex under the Parse Format. Under the Regex Pattern, I put in the final pattern above. I’ve checked the box for Parse Timestamp, chosen Manual for the format, and put in the ctime parsing codes for my timestamp’s pattern. Once done, it looks like this:

Sending & Verifying The Data

To complete the testing, I need to create a destination and check the data there. For my use case, I’ve chosen a Google Cloud Logging destination.

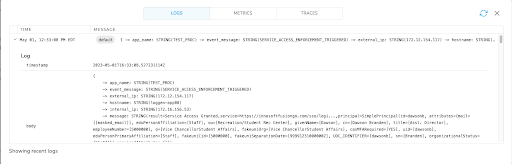

Once my pipeline configuration is complete, I attach it to an agent. After it has run for a few moments, I click the “View Recent Telemetry Button” on the agent’s page.

The telemetry view shows me the following parsed log:

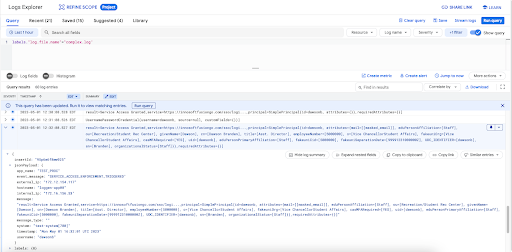

Finally, I check it on the Google Cloud Logging console as well:

This displays the same log entry, and it has a jsonPayload of our body’s JSON map object from the recent telemetry view.

Next Steps

For next steps, I would want to look at parsing that message value. It is frequently a key/value set; as it is in the screenshots & samples above. I could pass the data onward to a processor that parses these key/value entries into another layer of JSON. In the above example, body.message would get parsed back into itself, and you could have fields such as:

- body.message.result=Service Access Granted

- body.message.service=https://innosoftfusiongo.com/sso/logi…

- body.message.principal=SimplePrincipal(id=dawsonb, attributes={mail=[dawson.branden@fakeuni.edu], eduPersonAffiliation=[Staff], ou=[Recreation/Student Rec Center], givenName=[Dawson], cn=[Dawson Branden], title=[Asst. Director], employeeNumber=[5000000], o=[Vice ChancellorStudent Affairs], fakeuniOrg=[Vice ChancellorStudent Affairs], casMFARequired=[YES], uid=[dawsonb], eduPersonPrimaryAffiliation=[Staff], fakeuniCid=[5000000], fakeuniSeparationDate=[99991231000000Z], UDC_IDENTIFIER=[dawsonb], sn=[Branden], organizationalStatus=[Staff]})

- body.message.requiredAttributes=””

Even this could be parsed further by putting a body.message.principle through a key/value parser as well.

Now, someone is bound to wonder, “Why didn’t you just use regex parsing of the body.message subfields as well?” The answer: It is too inconsistent. The regex would be incredibly, and unreasonably, complex when we have the capability to parse key/value pairs already.

Conclusion

Many forms of data can be found in log files. This data often needs to be parsed to make it both more easily readable for humans and easier for automation and tooling later in the chain to act upon.

While the example I worked with was performed on a simple file log, the techniques herein can be used on any log stream. In addition to regex parsing, BindPlane also supports json, xml, key/value pairs, and character-separated values. With the use of processors, these parsers can be chained together to parse embedded data and manipulate it all into a usable format.