How to Manage Sensitive Log Data

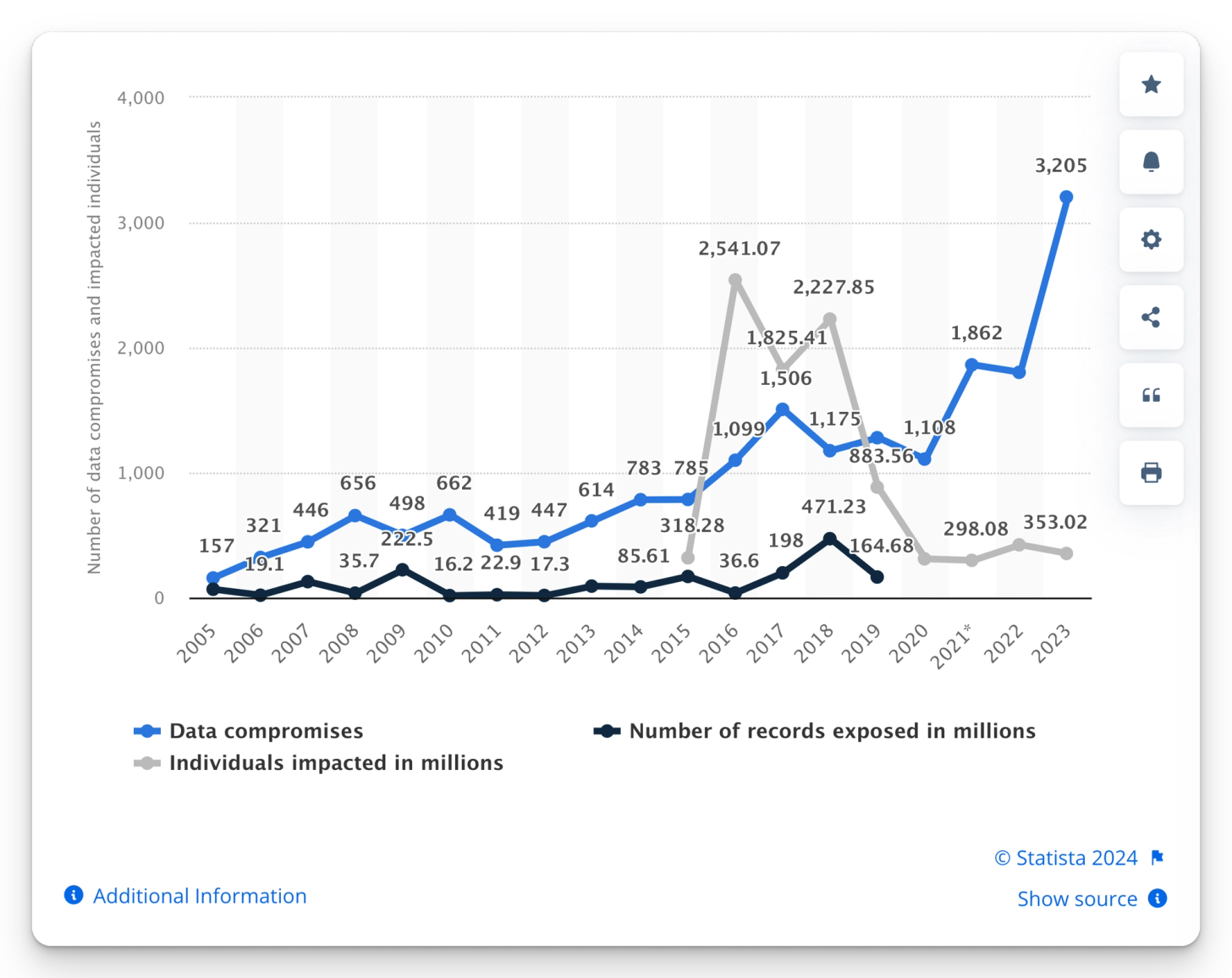

According to Statista, the total number of data breaches reached an all-time high of 3,205 in 2023, affecting more than 350 million individuals worldwide. These breaches primarily occurred in the Healthcare, Financial Services, Manufacturing, Professional Services, and Technology sectors.

The mishandling of sensitive log data provides an on-ramp to various cyber-attacks. Compromised credentials, malicious insiders, phishing, and ransomware attacks can all be initiated with sensitive data stored in log files.

As an organization, keeping sensitive logs safe is critical. Failing to do so can have serious consequences for your business and for the people you work with, including partners, customers, and employees.

Plus, as application and system architectures continue to grow, so does the volume of sensitive log data. Finding a secure way to handle all this data is essential.

In this post, I’ll guide you through:

- The risks of logging sensitive data

- Applicable regulations and standards

- Best practices for managing log data

- Tips to achieve logging compliance

- Tools to manage sensitive log data

What is Sensitive Log Data?

Sensitive log data refers to any information captured in log files that could potentially cause harm to an organization if exposed to an unauthorized party. Some of the key categories include:

- Personally Identifiable Information (PII): Names, addresses, social security numbers, phone numbers, and email addresses.

- Financial Information: Credit card numbers, bank account numbers, or other financial transaction details.

- Protected Health Information (PHI): Medical records, health insurance information, or any data related to an individual's health status.

- Authentication Information: Usernames, passwords, API keys, or session tokens that could be used to gain unauthorized access to systems or applications.

- Confidential Business Information: Trade secrets, proprietary algorithms, intellectual property, and other financial information.

- Encryption keys: Any keys used to encrypt or decrypt sensitive data, which could compromise the security of the encrypted information if exposed.

- Sensitive URLs or API endpoints: Log entries that reveal sensitive API paths or contain sensitive data within the URL structure.
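To make a few of these categories concrete, here is a minimal sketch of how a log line could be scanned for them. The pattern set and the names `PATTERNS`, `luhn_valid`, and `find_sensitive` are illustrative assumptions rather than part of any particular tool, and the regular expressions are deliberately simple; a production detector would need broader, well-tested rules.

```python
import re

# Illustrative patterns only (assumptions, not an exhaustive rule set).
PATTERNS = {
    "email": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    # 13-19 digits, optionally separated by single spaces or hyphens.
    "card_number": re.compile(r"\b\d(?:[ -]?\d){12,18}\b"),
    "bearer_token": re.compile(r"(?i)\bbearer\s+[a-z0-9._~+/-]+=*"),
}


def luhn_valid(number: str) -> bool:
    """Return True if the digits in `number` pass the Luhn checksum."""
    digits = [int(ch) for ch in number if ch.isdigit()]
    total = 0
    for index, digit in enumerate(reversed(digits)):
        if index % 2 == 1:  # double every second digit from the right
            digit *= 2
            if digit > 9:
                digit -= 9
        total += digit
    return bool(digits) and total % 10 == 0


def find_sensitive(line: str) -> dict[str, list[str]]:
    """Map each matched category name to the substrings found in `line`."""
    found: dict[str, list[str]] = {}
    for name, pattern in PATTERNS.items():
        matches = pattern.findall(line)
        if name == "card_number":
            # Drop digit runs that cannot be card numbers to cut false positives.
            matches = [m for m in matches if luhn_valid(m)]
        if matches:
            found[name] = matches
    return found
```

Calling `find_sensitive` on each line of a sample log file gives a rough first inventory of which categories appear and where. The Luhn check is there only to reduce false positives on long numeric IDs.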

What are the Risks of Logging Sensitive Data?

When you don't handle sensitive data logs carefully, it can lead to serious problems for both organizations and individuals. Here's what could happen:

Data Breaches

If someone gets into your sensitive logs without permission, they could access PII, financial data, and intellectual property. Breaches like this can have an enormous monetary impact on your business and damage the trust of your customers, partners, and employees.

For example, a report by Ponemon in 2023 found that the average cost of a data breach worldwide was about 4.5 million dollars, and nearly 9.5 million dollars in the United States alone.

Compliance Violations

Sensitive data exposure may violate laws and regulations such as GDPR, HIPAA, and SOX. Breaking these rules can result in major fines, ranging from thousands to millions of dollars, depending on how bad the breach was.

For instance, since 2003, the Office for Civil Rights has settled or imposed penalties for HIPAA violations totaling more than $140 million in about 140 cases.

Reputational Damage

If your data leaks, people will lose trust in your organization, harming your public image and competitive advantage. For many customers, a single incident is enough: a 2017 IDC report showed that 80% of consumers in developed countries will jump ship from a business that accidentally exposed their PII.

So, it’s crucial to handle sensitive data logs with care to avoid these kinds of problems.

What Laws Apply to Sensitive Log Data and Logging Compliance?

Several key pieces of legislation have been passed that implement frameworks directly affecting how sensitive log data is managed. These frameworks require businesses to comply with specific requirements or face significant penalties.

Laws and Regulatory Frameworks

- The General Data Protection Regulation (GDPR - EU): mandates the protection of personal information through data minimization, retention limits, access controls, and encryption.

- The Health Insurance Portability and Accountability Act (HIPAA - US): establishes requirements for access control, audit controls, data integrity, authentication, encryption, and retention of ePHI.

- The Sarbanes-Oxley Act (SOX - US): mandates the maintenance and retention of audit trails and logs that could affect financial reporting and compliance.

- The Federal Information Security Management Act (FISMA - US): requires that federal agencies develop, document, and implement an information security and protection program.

- The Gramm-Leach-Bliley Act (GLBA - US): mandates that financial institutions protect confidential consumer information, including logs and audit trails.

Please note that there are many state and provincial regulations to understand and consider as well.

What are the Standards that Apply to Sensitive Log Data?

In addition to laws and regulatory frameworks, several security standards help guide organizations with specific requirements for managing sensitive data. Compliance with these standards is not legally mandated, but vendors often require proof of certification, demonstrating a commitment to security and proper controls. Here is a list of some of the most notable standards:

- The Payment Card Industry Data Security Standard (PCI DSS): an internationally agreed-upon standard put forth by the major credit card brands. Often mistaken for a federal regulation, it prohibits logging full credit card numbers and CVV codes and requires masking, encryption, and tight access controls.

- System and Organization Controls 2 (SOC 2): a set of standards specifically tailored for companies that store customer data in the cloud. It’s built around five “Trust Services Criteria”: Security, Availability, Processing Integrity, Confidentiality, and Privacy.

- ISO/IEC 27001: a widely recognized international standard for implementing an Information Security Management System (ISMS). It includes requirements for maintaining logs and performing regular log reviews.

- National Institute of Standards and Technology (NIST SP 800-92): guidelines for secure log management, including considerations for handling sensitive log data.
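As an illustration of the masking idea mentioned under PCI DSS, the sketch below replaces all but the last four digits of anything that looks like a card number before a message is written to a log. The function names and the digit-run pattern are assumptions made for this example, and it is not a substitute for reading the standard's actual requirements.

```python
import re

# Matches runs of 13-19 digits, optionally separated by single spaces or hyphens.
# This is a simplified assumption about what a card number looks like in a log.
PAN_PATTERN = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")


def mask_pan(match: re.Match) -> str:
    """Replace all but the last four digits of a matched card number."""
    digits = re.sub(r"\D", "", match.group())
    return "*" * (len(digits) - 4) + digits[-4:]


def mask_card_numbers(message: str) -> str:
    """Return `message` with every card-like number masked."""
    return PAN_PATTERN.sub(mask_pan, message)
```

For example, `mask_card_numbers("charge card=4111-1111-1111-1111 amount=20")` keeps the amount intact and leaves only the final four card digits visible.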

Logging Compliance vs. Certification

A quick note about compliance vs. certification, since these terms are sometimes (incorrectly) used interchangeably.

In this context, compliance refers to adhering to the regulatory requirements or optional standards mentioned above.

Certification means stepping through a defined process where a third party validates your implementation and issues a certification. For example, for HIPAA, you can acquire a certification, but only compliance is mandated by law. Although certification tends to be optional, it is a useful way to ‘check the boxes’ and ensure your organization is aligned correctly.

Next, let’s move down a level and discuss some best practices for managing sensitive data and tips for achieving logging compliance.

Best Practices for Managing Sensitive Log Data

These best practices apply to the regulations and standards mentioned above.

- Map Sensitive Log Data: create a map of your sensitive log data that identifies its source, location, and sensitivity level, and ties each item to the regulations and standards that pertain to your business.

- Encryption: ensure all log data is encrypted both in transit, using TLS or mTLS depending on where it's being delivered, and at rest.

- Isolate Sensitive Log Data: tag and route sensitive log data to separate buckets, indexes, or back-ends/analysis tools. This makes it easier to apply different levels of monitoring and analysis, identify anomalous behavior, and mitigate the risk of exposing sensitive information.

- Implement role-based access controls (RBAC): limit exposure to sensitive log data to only the teams or tools that need it. Within each role, grant least-privileged access.

- Structure your Logs: Adding standardized structure to your data makes detecting and identifying sensitive information easier. These clear structures also make log output easier for your software developers to work with.

- Obfuscate Sensitive Log Data in Code: Tokenize and mask sensitive information like usernames, passwords, IDs, and credit card numbers before it is logged.

- Obfuscate Sensitive Log Data before Analysis: most observability and SIEM tools have built-in functionality to filter, mask, and route sensitive log data. If it isn’t handled in code, use the tools available in your observability or SIEM platform.

- Code reviews: include data sensitivity checks in regularly scheduled code reviews.

- Leverage Automated Tooling: utilize automated tooling to detect potentially sensitive information in your code. GitHub, for example, can automatically scan for and notify you about exposed secrets with its secret scanning feature.

- Log Testing and Validation: as a backstop, include manual checks to identify unencrypted or sensitive data in logs as part of regression and/or acceptance testing.

- Retain Audit Logs Securely (longer than required, but not forever): Regulations dictate how long audit logs must be stored, but in many scenarios audit logs also provide useful context for investigating anomalous behavior and compromised systems. In some instances, systems are compromised for years without detection. Having audit data available that goes back 6-12 months can be useful.

- Focused Training: Make a concerted effort to train your employees on the importance of managing sensitive data at regular intervals.
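Two of the practices above, structuring your logs and obfuscating sensitive data in code, can be sketched together with Python's standard `logging` module. This is a minimal illustration under stated assumptions: the field names in `SENSITIVE_FIELDS`, the `fields` key passed via `extra`, and the logger name `app.audit` are all hypothetical choices for this example.

```python
import io
import json
import logging

# Hypothetical field names; adjust to whatever your application actually logs.
SENSITIVE_FIELDS = {"password", "api_key", "session_token", "ssn"}


class RedactingJsonFormatter(logging.Formatter):
    """Emit each record as one JSON object, masking sensitive field values."""

    def format(self, record: logging.LogRecord) -> str:
        # Structured fields arrive through `extra={"fields": {...}}`.
        fields = getattr(record, "fields", {})
        safe_fields = {
            key: "[REDACTED]" if key.lower() in SENSITIVE_FIELDS else value
            for key, value in fields.items()
        }
        payload = {
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
            **safe_fields,
        }
        return json.dumps(payload)


def build_logger(stream) -> logging.Logger:
    """Create a logger that writes redacted JSON lines to `stream`."""
    logger = logging.getLogger("app.audit")
    logger.setLevel(logging.INFO)
    logger.propagate = False
    logger.handlers.clear()
    handler = logging.StreamHandler(stream)
    handler.setFormatter(RedactingJsonFormatter())
    logger.addHandler(handler)
    return logger


buffer = io.StringIO()
log = build_logger(buffer)
log.info("user login", extra={"fields": {"user_id": 42, "password": "hunter2"}})
print(buffer.getvalue().strip())
```

Because redaction happens in the formatter, the password value never reaches the output stream, and the consistent JSON structure makes it easier for downstream tools to find and filter the remaining fields. Note that this sketch only masks named fields; sensitive values embedded in the free-text message would need separate handling.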

Tips for Logging Compliance and Certification

Following the logging security best practices above is a good way to work toward compliance, but it’s not a comprehensive list. When talking to customers, we generally recommend the following:

- Research the appropriate legislation and regulatory requirements that directly impact your organization's applications and systems.

- Align to one or more of the standards outlined above that apply to the domain of your business or application, using standards as a blueprint to help guide your teams with specific actions and tasks.

- Seek certifications, even if they’re optional. Certifications for HIPAA, SOC 2, and PCI DSS are optional, but they reduce risk and remove hurdles as potential customers move forward in the buying process.

- Start your compliance and certification efforts sooner, rather than later. For SOC 2 compliance, it often takes 6-12 months to complete a third-party assessment.

- Use compliant observability and SIEM tools with the required certifications to analyze your data.

- Use a telemetry pipeline to centralize the management of your sensitive log data.

Using a Telemetry Pipeline to Manage Your Sensitive Log Data

Lastly, a quick note on telemetry pipelines. One of the challenges of managing sensitive log data is pulling together and analyzing disparate streams of log data to ensure their compliance.

Sensitive log data can be sourced from different proprietary agents and arrive with inconsistent structure. Without a centralized management plane, masking and routing that data appropriately becomes significantly more complex.

The Case for OpenTelemetry

OpenTelemetry is an open framework that provides a standardized set of components to collect, process, and transmit log data to one or many destinations. Its primary goals are vendor agnosticism and data ownership, giving practitioners maximum control of their log data.

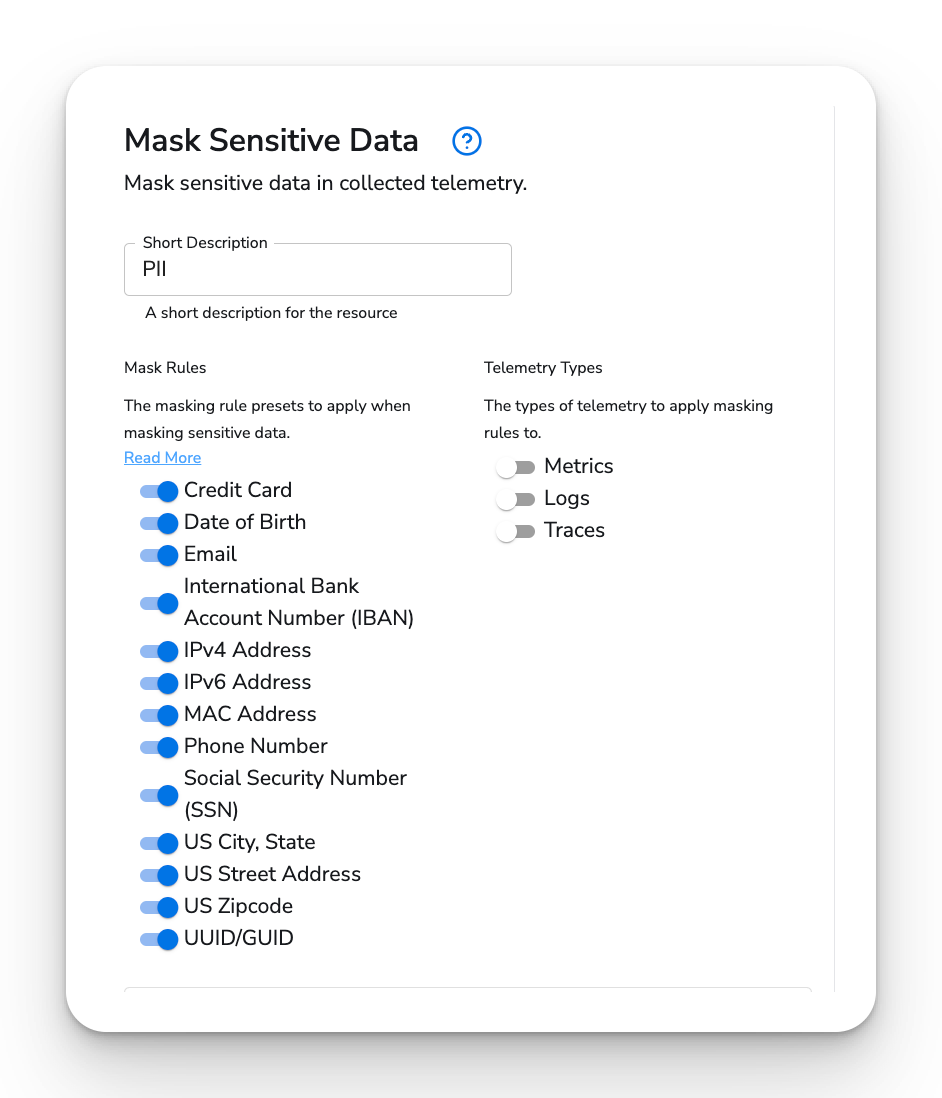

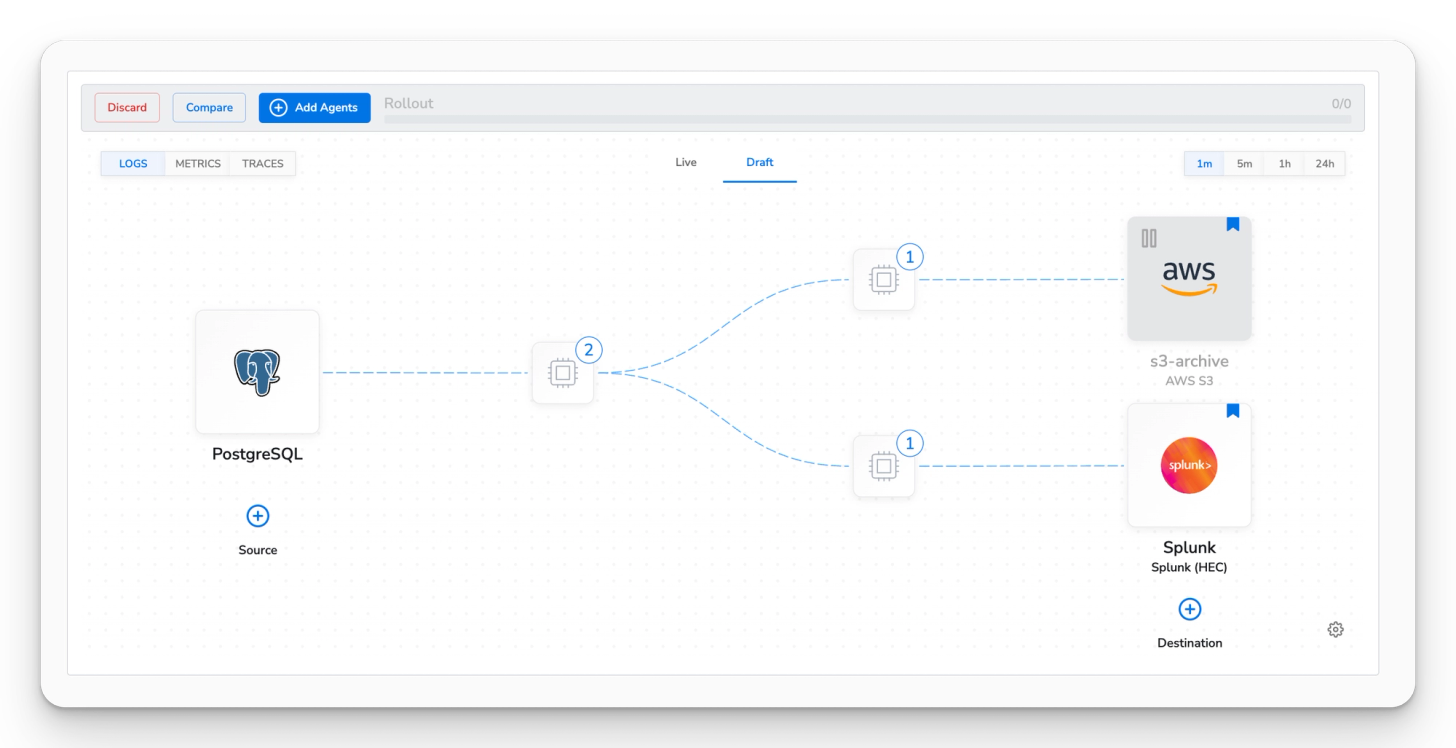

In the context of sensitive log data, it provides the functionality to split and isolate low and high-sensitivity log data streams, as well as filter, mask, and later re-hydrate audit log data for further analysis. Here are a few of the notable components:

- OpenTelemetry Collector: a lightweight agent/collector that ingests, processes, and ships telemetry data to any back-end.

- Processors: the mechanisms that enable filtering, masking, and routing log data within the OpenTelemetry Collector.

- Connectors: enable combining telemetry pipelines within an OpenTelemetry Collector.

- OTTL (OpenTelemetry Transformation Language): a language for advanced transformations of your log data.
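The idea of masking log data and then splitting it into low and high-sensitivity streams can be illustrated in plain Python. To be clear, this is a conceptual sketch and does not use the OpenTelemetry Collector or its configuration format; the `LogEntry` type, the `sensitivity` attribute, and the destination names are all assumptions invented for the example.

```python
from dataclasses import dataclass, field

# Hypothetical attribute keys whose values should never leave the pipeline unmasked.
MASKED_KEYS = {"password", "card_number"}


@dataclass
class LogEntry:
    body: str
    attributes: dict = field(default_factory=dict)


def mask_processor(entry: LogEntry) -> LogEntry:
    """Return a copy of `entry` with sensitive attribute values masked."""
    masked = {
        key: "****" if key in MASKED_KEYS else value
        for key, value in entry.attributes.items()
    }
    return LogEntry(entry.body, masked)


def route(entry: LogEntry) -> str:
    """Pick a destination name based on the entry's sensitivity tag."""
    if entry.attributes.get("sensitivity") == "high":
        return "secure_store"
    return "general_store"


def run_pipeline(entries: list[LogEntry]) -> dict[str, list[LogEntry]]:
    """Mask every entry, then split the stream by destination."""
    destinations: dict[str, list[LogEntry]] = {"secure_store": [], "general_store": []}
    for entry in entries:
        processed = mask_processor(entry)
        destinations[route(processed)].append(processed)
    return destinations
```

In a real collector deployment, the masking step and the routing step would each be handled by configured components rather than hand-written functions, but the flow (process first, then route by a sensitivity tag) is the same shape.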

Building a Telemetry Pipeline with BindPlane OP

BindPlane OP builds on top of OpenTelemetry and provides a centralized management plane for OpenTelemetry collectors, streamlining the process of filtering, masking, and routing sensitive data.

It also simplifies the process of routing log data to one or many destinations and provides a single view of your telemetry pipeline, so you can see and act on your sensitive data from one place.

And that’s a wrap. If you’re interested in learning more about security best practices, OpenTelemetry, or BindPlane OP, head over to the BindPlane OP solutions page or reach out to our team at info@observiq.com.
