How to Install and Configure an OpenTelemetry Collector

Over the last 12 months, the OpenTelemetry project has made significant progress in contributions, stability, and adoption, so it felt like a good time to refresh this post and give project newcomers a short guide to getting up and running quickly.

In this post, I'll step through:

- A brief overview of OpenTelemetry and the OpenTelemetry Collector

- A simple guide to installing and configuring the OpenTelemetry Collector and using it to ship observability data to a back-end

OpenTelemetry: A Brief Overview

What is OpenTelemetry?

The OpenTelemetry project (“OTel”), incubated by the CNCF, is an open-source framework that standardizes the way observability data (metrics, logs, and traces) are gathered, processed, and exported. OTel squarely focuses on observability data and unlocks a vendor-agnostic pathway to nearly any back-end for insight and analysis.

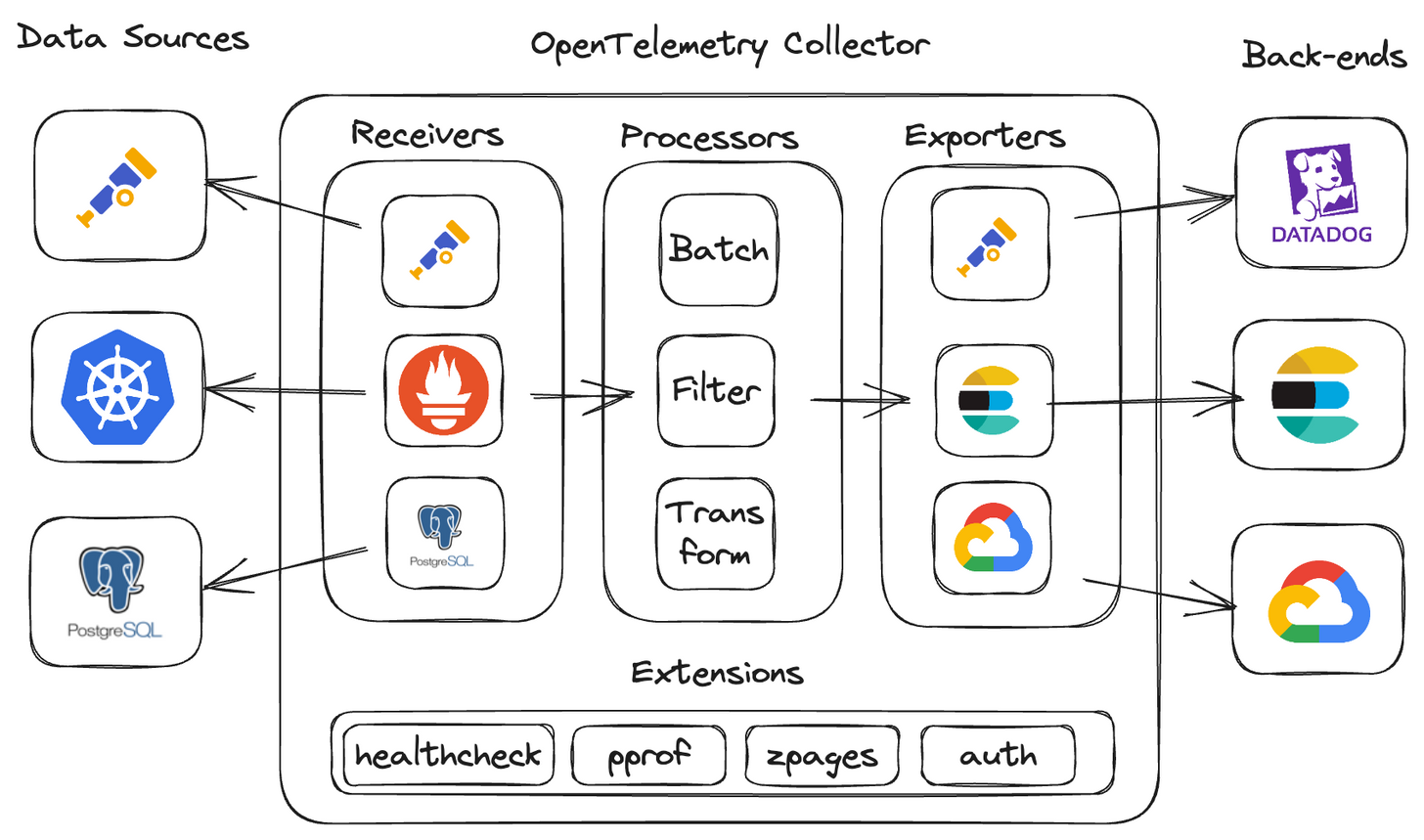

What is an OpenTelemetry Collector?

The OpenTelemetry collector is a service responsible for ingesting, processing, and transmitting observability data. Data is shared between data sources, components, and back-ends with a standardized protocol known as the OpenTelemetry Protocol (“OTLP”). The collector can be installed locally as a traditional agent, deployed remotely as a collector, or deployed as an aggregator that ingests data from multiple collectors.

Benefits of using an OpenTelemetry Collector

OpenTelemetry offers open-source monitoring tools that gather telemetry data for understanding distributed systems and applications. It helps overcome challenges such as dependence on proprietary tools and non-standard configurations. The project promotes a vendor-neutral framework and has gained support from a wide range of organizations. It provides flexibility through its collector, SDKs, integrations, and distributions, and it lets you consolidate separate telemetry pipelines into a single observability pipeline.


observIQ's contributions to OpenTelemetry

observIQ has made several significant contributions to the OpenTelemetry Project:

- In 2020, observIQ donated the open-source log agent Stanza to the project. This code was further developed and established as the core logging library for the OpenTelemetry Collector in 2023.

- observIQ has contributed to and improved over 40 Receivers, Processors, and Exporters for popular technologies such as Azure, Cloudflare, NGINX, and Windows Events for the OpenTelemetry Collector.

- observIQ has made significant contributions to the development of Connectors, which are an important part of the OpenTelemetry collector, facilitating advanced routing and connection between metric, log, and trace pipelines within the collector’s configuration.

- observIQ played a key role in designing and implementing the Open Agent Management Protocol (OpAMP), which enables remote management of the OpenTelemetry collector.

- In 2023, observIQ launched BindPlane OP, a purpose-built observability pipeline for OpenTelemetry.

What are the primary components of the OpenTelemetry collector?

- Receivers: ingest data into the collector

- Processors: enrich, reduce, and refine the data

- Exporters: export the data to another collector or back-end

- Connectors: connect two or more pipelines together

- Extensions: expand collector functionality in areas not directly related to data collection, processing, or transmission.

You can link these components together to create a clear and understandable data pipeline for observability within the collector’s configuration.
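To make that linking concrete, here is a toy Python model of a single pipeline. It is a sketch for intuition only and not how the collector is implemented (the collector is written in Go). It shows two properties of a pipeline: processors run one after another in the order they are listed, and every exporter receives the same processed data. The drop_debug and add_host processors are hypothetical examples.

```python
from typing import Callable, Dict, List

# A "record" is a plain dict here; the real collector passes structured OTLP data.
Record = Dict[str, str]
Processor = Callable[[List[Record]], List[Record]]
Exporter = Callable[[List[Record]], None]


def run_pipeline(received: List[Record], processors: List[Processor],
                 exporters: List[Exporter]) -> List[Record]:
    """Toy pipeline: apply processors in the listed order, then hand the
    same processed data to every exporter (fan-out)."""
    data = list(received)
    for process in processors:
        data = process(data)
    for export in exporters:
        export(data)
    return data


def drop_debug(records: List[Record]) -> List[Record]:
    """Hypothetical processor: reduce volume by dropping DEBUG records."""
    return [r for r in records if r.get("severity") != "DEBUG"]


def add_host(records: List[Record]) -> List[Record]:
    """Hypothetical processor: enrich each record with a host name."""
    return [{**r, "host.name": "demo-host"} for r in records]


if __name__ == "__main__":
    console: List[List[Record]] = []
    backend: List[List[Record]] = []
    logs = [{"body": "started", "severity": "INFO"},
            {"body": "noisy detail", "severity": "DEBUG"}]
    result = run_pipeline(logs, [drop_debug, add_host], [console.append, backend.append])
    print(result)  # [{'body': 'started', 'severity': 'INFO', 'host.name': 'demo-host'}]
```

Swapping the order of the processors changes what the exporters see. The same is true of the processors list in a real pipeline definition.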

Collecting and Exporting Host Metrics and Logs

Let's start by considering the basic but crucial task of monitoring the health and performance of a Linux host running any workload. This involves gathering and sending host metrics and logs to a back-end for visualization and analysis.

How to Get Started

- You’ll need a Linux host with superuser privileges. Any modern distribution will work, although the installation commands below assume a Debian-based system that uses .deb packages. For this example, I’ve deployed a Debian 10 VM on GCE.

- You'll also need a backend. I've opted for Grafana Cloud because it has a free tier with a native OTLP endpoint for data ingestion, making the configuration easier.

- You'll need a Grafana Cloud <access_policy_token>, <instance_ID>, and <region>. You can set these up by following this link (it takes about 5 minutes).
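The instance ID and access policy token are sent with HTTP Basic authentication. The basicauth extension in the configuration later in this post uses them as the username and password. The sketch below shows roughly what ends up in the Authorization header, using the standard RFC 7617 encoding. This can be handy if you want to test the endpoint with another HTTP client before configuring the collector. The values are made-up placeholders.

```python
import base64


def basic_auth_header(instance_id: str, token: str) -> str:
    """Build an HTTP Basic Authorization header value (RFC 7617):
    "Basic " + base64("<username>:<password>"), using the Grafana Cloud
    instance ID as the username and the access policy token as the password."""
    credentials = f"{instance_id}:{token}".encode("utf-8")
    return "Basic " + base64.b64encode(credentials).decode("ascii")


if __name__ == "__main__":
    # Placeholder values only; never hard-code a real token in source code.
    print(basic_auth_header("123456", "example-token"))
```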

Installing the OpenTelemetry Collector

Start by running the installation commands on your host.

```shell
sudo apt-get update
sudo apt-get -y install wget systemctl
wget https://github.com/open-telemetry/opentelemetry-collector-releases/releases/download/v0.85.0/otelcol-contrib_0.85.0_linux_amd64.deb
sudo dpkg -i otelcol-contrib_0.85.0_linux_amd64.deb
```

Remember, you can substitute ‘0.85.0’ with newer releases as they become available. Note that newer releases deprecate the logging exporter used later in this post in favor of the debug exporter.

Once the installation completes, otelcol-contrib is registered as a systemd service and the collector starts automatically.

You’ll find the collector configuration file here:

/etc/otelcol-contrib/config.yaml


Reviewing the Default Configuration

If you’re already familiar with the default configuration, you can skip ahead to the Configuring the Collector section.

The default config.yaml includes pre-configured (optional) components and a sample pipeline to help you understand the syntax. Let’s quickly take a look at each section:

```shell
cat /etc/otelcol-contrib/config.yaml
```

Extensions

```yaml
extensions:
  health_check:
  pprof:
    endpoint: 0.0.0.0:1777
  zpages:
    endpoint: 0.0.0.0:55679
```

- health_check: exposes an HTTP endpoint with the collector status information

- pprof: exposes a net/http/pprof endpoint for investigating and profiling the collector process

- zpages: exposes an HTTP endpoint for debugging the collector components
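Once the collector is running, the health_check extension works as a quick liveness probe. The sketch below assumes the extension is listening on its default port, 13133, because the default config above doesn't set an endpoint for it. Check that port against the documentation for your release. The sketch treats HTTP 200 as healthy and anything else, including a refused connection, as unhealthy.

```python
import urllib.error
import urllib.request

# Assumption: health_check listens on its default port, 13133, on this host.
DEFAULT_HEALTH_URL = "http://localhost:13133/"


def collector_is_healthy(url: str = DEFAULT_HEALTH_URL, timeout: float = 2.0) -> bool:
    """Return True only if the health_check endpoint answers with HTTP 200."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status == 200
    except urllib.error.HTTPError:
        # Non-2xx answers (e.g. 503 while the collector is not ready) land here.
        return False
    except OSError:
        # Connection refused, DNS failure, or timeout (URLError is an OSError).
        return False
```

On the collector host, calling print(collector_is_healthy()) should print True while the service is up.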

Receivers

```yaml
receivers:
  otlp:
    protocols:
      grpc:
      http:
  opencensus:
  prometheus:
    config:
      scrape_configs:
        - job_name: 'otel-collector'
          scrape_interval: 10s
          static_configs:
            - targets: ['0.0.0.0:8888']
  jaeger:
    protocols:
      grpc:
      thrift_binary:
      thrift_compact:
      thrift_http:
  zipkin:
```

- otlp: ingests OTLP-formatted data from an app/system or another OTel collector

- opencensus: ingests spans and metrics from OpenCensus-instrumented applications

- prometheus: ingests metrics in Prometheus format; pre-configured to scrape the collector’s own internal metrics endpoint (port 8888)

- zipkin: ingests trace data in Zipkin format

- jaeger: ingests trace data in Jaeger format
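To see the otlp receiver in action, you can push a data point to it yourself. The minimal sketch below uses only the Python standard library. It assumes the http protocol is listening on its default port, 4318, and it uses the OTLP/JSON encoding, which OTLP/HTTP supports alongside protobuf. In the default configuration the otlp receiver also feeds the metrics pipeline (see the Service section), so the logging exporter should print the point to the collector's console. The metric name, scope name, and service name are arbitrary placeholders.

```python
import json
import time
import urllib.request

# Assumption: the otlp receiver's http protocol uses its default port, 4318.
OTLP_METRICS_URL = "http://localhost:4318/v1/metrics"


def build_gauge_payload(name: str, value: float, service_name: str) -> dict:
    """Build a minimal OTLP/JSON metrics export request with one gauge point."""
    return {
        "resourceMetrics": [{
            "resource": {
                "attributes": [
                    {"key": "service.name", "value": {"stringValue": service_name}},
                ],
            },
            "scopeMetrics": [{
                "scope": {"name": "manual-smoke-test"},
                "metrics": [{
                    "name": name,
                    "gauge": {
                        "dataPoints": [{
                            # OTLP/JSON encodes 64-bit integers as decimal strings.
                            "timeUnixNano": str(time.time_ns()),
                            "asDouble": value,
                        }],
                    },
                }],
            }],
        }],
    }


def send_gauge(name: str, value: float, service_name: str = "smoke-test",
               url: str = OTLP_METRICS_URL) -> int:
    """POST the payload to the collector and return the HTTP status code."""
    body = json.dumps(build_gauge_payload(name, value, service_name)).encode("utf-8")
    request = urllib.request.Request(
        url, data=body, method="POST", headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(request, timeout=5) as response:
        return response.status
```

For example, send_gauge("smoke_test.value", 42.0) should return 200 when the collector accepts the request.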

Processors

```yaml
processors:
  batch:
```

- batch: transmits telemetry data in batches, instead of streaming each data point or event.
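To illustrate the idea behind batching, here is a toy size-or-timeout batcher in Python. It is loosely modeled on the batch processor's two main settings. send_batch_size flushes once that many items are queued, and timeout flushes a partial batch after that long. The defaults used here, 8192 items and 200 ms, reflect my understanding of the processor's documented defaults and are worth confirming for your release. This is not the collector's implementation: the real processor flushes on its own timer, whereas this sketch relies on the caller to invoke tick().

```python
import time
from typing import Callable, List, Optional


class ToyBatcher:
    """Size-or-timeout batching, loosely modeled on the batch processor's
    send_batch_size and timeout settings. Not the collector's implementation."""

    def __init__(self, send: Callable[[List[str]], None],
                 send_batch_size: int = 8192, timeout_s: float = 0.2,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.send = send                      # downstream consumer, e.g. an exporter
        self.send_batch_size = send_batch_size
        self.timeout_s = timeout_s
        self.clock = clock                    # injectable so the behavior is testable
        self.items: List[str] = []
        self.oldest: Optional[float] = None   # when the current batch was started

    def add(self, item: str) -> None:
        """Queue one item; flush immediately once the batch is full."""
        if not self.items:
            self.oldest = self.clock()
        self.items.append(item)
        if len(self.items) >= self.send_batch_size:
            self.flush()

    def tick(self) -> None:
        """Call periodically; flushes a partial batch once it has waited timeout_s."""
        if self.items and self.oldest is not None and self.clock() - self.oldest >= self.timeout_s:
            self.flush()

    def flush(self) -> None:
        """Send whatever is queued as one batch and start a new one."""
        if self.items:
            self.send(self.items)
            self.items = []
            self.oldest = None
```

The trade-off is the same as in the real processor. Larger batches mean fewer, more efficient requests to the back-end, and the timeout caps how long a quiet pipeline can hold data back.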

Exporters

```yaml
exporters:
  logging:
    verbosity: detailed
```

- logging: exports collector data to the console. This is very useful for quickly checking that your config is working.

Service

```yaml
service:
  pipelines:
    traces:
      receivers: [otlp,opencensus,jaeger,zipkin]
      processors: [batch]
      exporters: [logging]
    metrics:
      receivers: [otlp,opencensus,prometheus]
      processors: [batch]
      exporters: [logging]
  extensions: [health_check,pprof,zpages]
```

- service: where pipelines are assembled from the components configured above. It’s important to know that a component won’t be enabled unless it’s been referenced here.

- pipelines: reference the receivers, processors, and exporters configured above. Some (but not all) components can be shared across pipelines, as seen in the example (otlp, batch, logging).

- extensions: where you enable the extensions you’ve configured above.

- Note: logs is the third type of pipeline you can create, but it hasn’t been added to the default config.
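A component that is configured but not referenced under service is simply never started. A pipeline that references an undefined component, by contrast, stops the collector from starting at all. It can therefore help to cross-check the two. The hypothetical helper below sketches that check. It assumes config.yaml has already been parsed into a Python dict, for example with a YAML library such as PyYAML (not part of the standard library). Names under connectors count as defined receivers/exporters, but the helper does not check whether connectors are used.

```python
from typing import Dict, List, Set

PIPELINE_SECTIONS = ("receivers", "processors", "exporters")


def check_config(config: dict) -> Dict[str, List[str]]:
    """Hypothetical helper: cross-check a parsed config.yaml.

    "missing": names referenced under service that are not defined
               (the collector refuses to start in this case).
    "unused":  components that are defined but never referenced
               (the collector does not start them).
    """
    service = config.get("service") or {}
    connectors = set(config.get("connectors") or {})
    enabled: Dict[str, Set[str]] = {s: set() for s in PIPELINE_SECTIONS + ("extensions",)}
    missing: List[str] = []

    for pipeline_id, pipeline in (service.get("pipelines") or {}).items():
        for section in PIPELINE_SECTIONS:
            defined = set(config.get(section) or {})
            if section != "processors":
                defined |= connectors  # connectors act as both exporter and receiver
            for name in pipeline.get(section) or []:
                enabled[section].add(name)
                if name not in defined:
                    missing.append(f"pipelines.{pipeline_id}.{section}: {name}")

    for name in service.get("extensions") or []:
        enabled["extensions"].add(name)
        if name not in (config.get("extensions") or {}):
            missing.append(f"service.extensions: {name}")

    unused = [f"{section}: {name}"
              for section, names in enabled.items()
              for name in (config.get(section) or {})
              if name not in names]
    return {"missing": missing, "unused": unused}
```

For example, it would flag an exporter that a pipeline still lists after its definition was removed from the exporters section.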

Configuring the Collector

Next, let's update the config:

```shell
sudo vim /etc/otelcol-contrib/config.yaml
```

I followed these steps:

- I removed the optional components (for clarity; this step is totally optional)

- I configured the required components

- I constructed both a metrics and logs pipeline

Here's the result (with comments):

```yaml
extensions:
  basicauth: # required to authenticate to the Grafana Cloud OTLP endpoint
    client_auth:
      username: <Grafana Cloud Instance ID>
      password: <Grafana Cloud Access Policy Token>

receivers:
  hostmetrics: # collects host metrics from the specified categories
    collection_interval: 1m
    scrapers:
      cpu:
      disk:
      memory:
      network:
  filelog: # reads the log file at the specified path, starting at the end of the file
    start_at: end
    include: [/var/log/syslog]

processors:
  batch:
  resourcedetection: # detects and adds the hostname as resource metadata
    detectors: ["system"]
    system:
      hostname_sources: ["os"]

exporters:
  otlphttp: # exports data in OTLP format over HTTP
    endpoint: https://otlp-gateway-prod-us-central-0.grafana.net/otlp # adjust to your <region>
    auth:
      authenticator: basicauth
  logging: # prints data to the console; must be defined because the pipelines reference it
    verbosity: detailed

service:
  extensions: # referenced outside of the pipelines
    - basicauth
  pipelines:
    metrics: # pipeline types are lowercase: traces, metrics, or logs
      receivers: [hostmetrics]
      processors: [batch,resourcedetection]
      exporters: [otlphttp,logging]
    logs: # tails syslog, adds the hostname, and exports
      receivers: [filelog]
      processors: [batch,resourcedetection]
      exporters: [otlphttp,logging]
```

Once the config.yaml has been updated, restart the collector and review the output in your console:

```shell
sudo systemctl restart otelcol-contrib && sudo journalctl -f --unit otelcol-contrib
```

If all is well, you’ll start to see activity like this in your console, indicating the collector has restarted and data is flowing successfully:

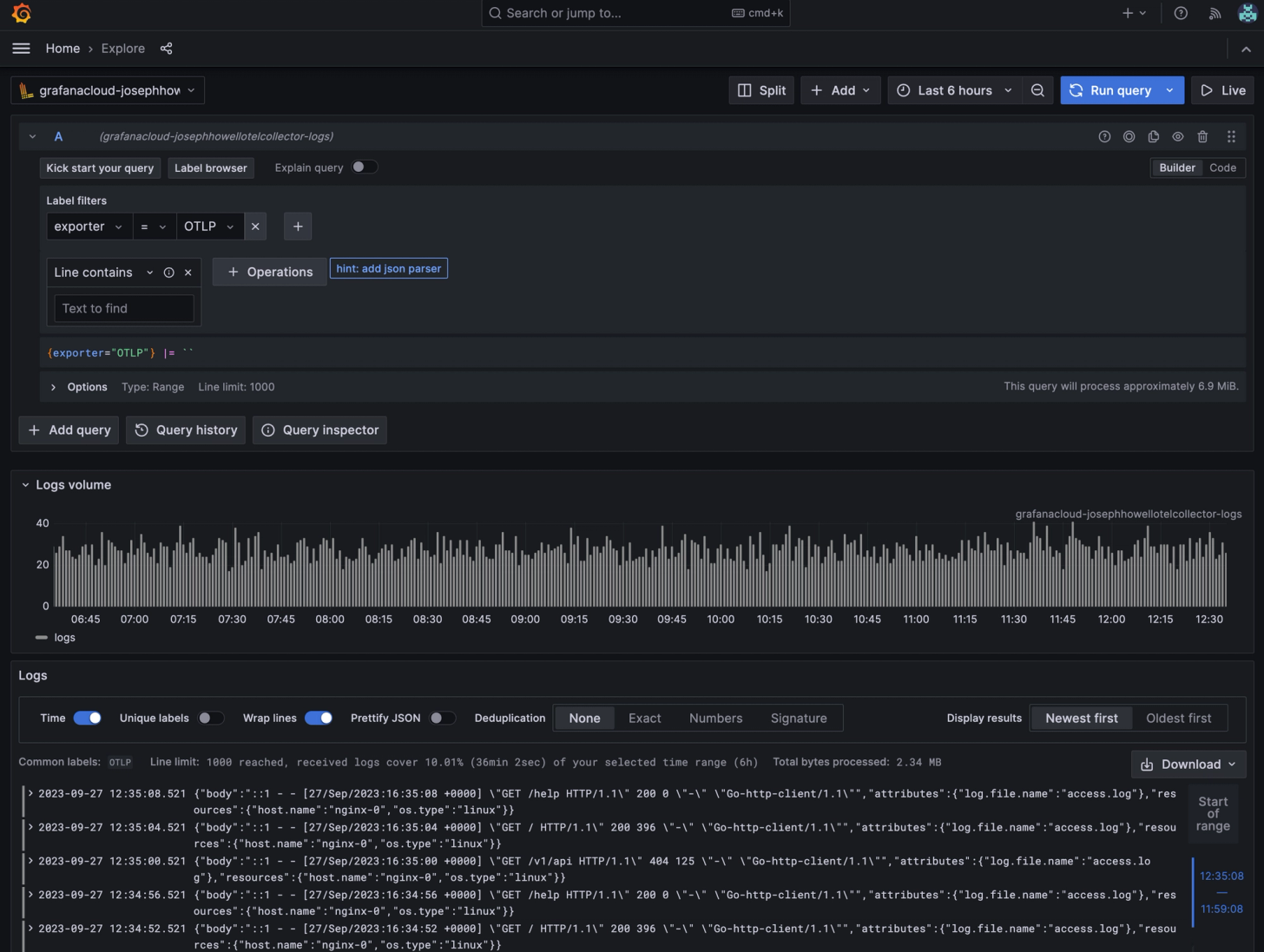
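If you'd rather confirm programmatically that data is leaving the collector, the collector also reports its own metrics in Prometheus text format on port 8888. This is the endpoint the default prometheus receiver was set up to scrape. The sketch below sums every sample whose name starts with a given prefix. It targets counters such as otelcol_exporter_sent_metric_points and otelcol_exporter_send_failed_metric_points. Exact metric names can differ between collector releases, which is why the sketch matches on a prefix. The port and the names are assumptions to verify against your installation.

```python
import re
import urllib.request
from typing import Dict

# Assumption: the collector's internal telemetry endpoint is on its default port, 8888.
METRICS_URL = "http://localhost:8888/metrics"
# name, optional {labels}, then the sample value
SAMPLE_LINE = re.compile(r"^([a-zA-Z_:][a-zA-Z0-9_:]*)(\{[^}]*\})?\s+(\S+)")


def sum_by_prefix(exposition: str, prefix: str) -> Dict[str, float]:
    """Sum samples whose metric name starts with `prefix`, grouped by metric
    name (label sets such as different exporters are added together)."""
    totals: Dict[str, float] = {}
    for line in exposition.splitlines():
        if not line or line.startswith("#"):
            continue  # skip blank lines and HELP/TYPE comments
        match = SAMPLE_LINE.match(line)
        if match and match.group(1).startswith(prefix):
            name = match.group(1)
            totals[name] = totals.get(name, 0.0) + float(match.group(3))
    return totals


def fetch_exporter_counters(url: str = METRICS_URL) -> Dict[str, float]:
    """Fetch the collector's own metrics and total the exporter counters."""
    with urllib.request.urlopen(url, timeout=5) as response:
        text = response.read().decode("utf-8")
    return sum_by_prefix(text, "otelcol_exporter_")
```

Steadily growing "sent" counters alongside flat "send_failed" counters suggest that the exporters are delivering data.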

Finding your Observability Data in Grafana Cloud

To find your data, open Grafana in your Grafana Cloud account and go to the Explore console. Grafana Cloud automatically maps and directs OTLP data to Prometheus, Loki, and Tempo data sources for metrics, logs, and traces, respectively.

Note: if you’re running a local instance of Grafana, use the Loki and Prometheus exporters in place of the otlphttp exporter.

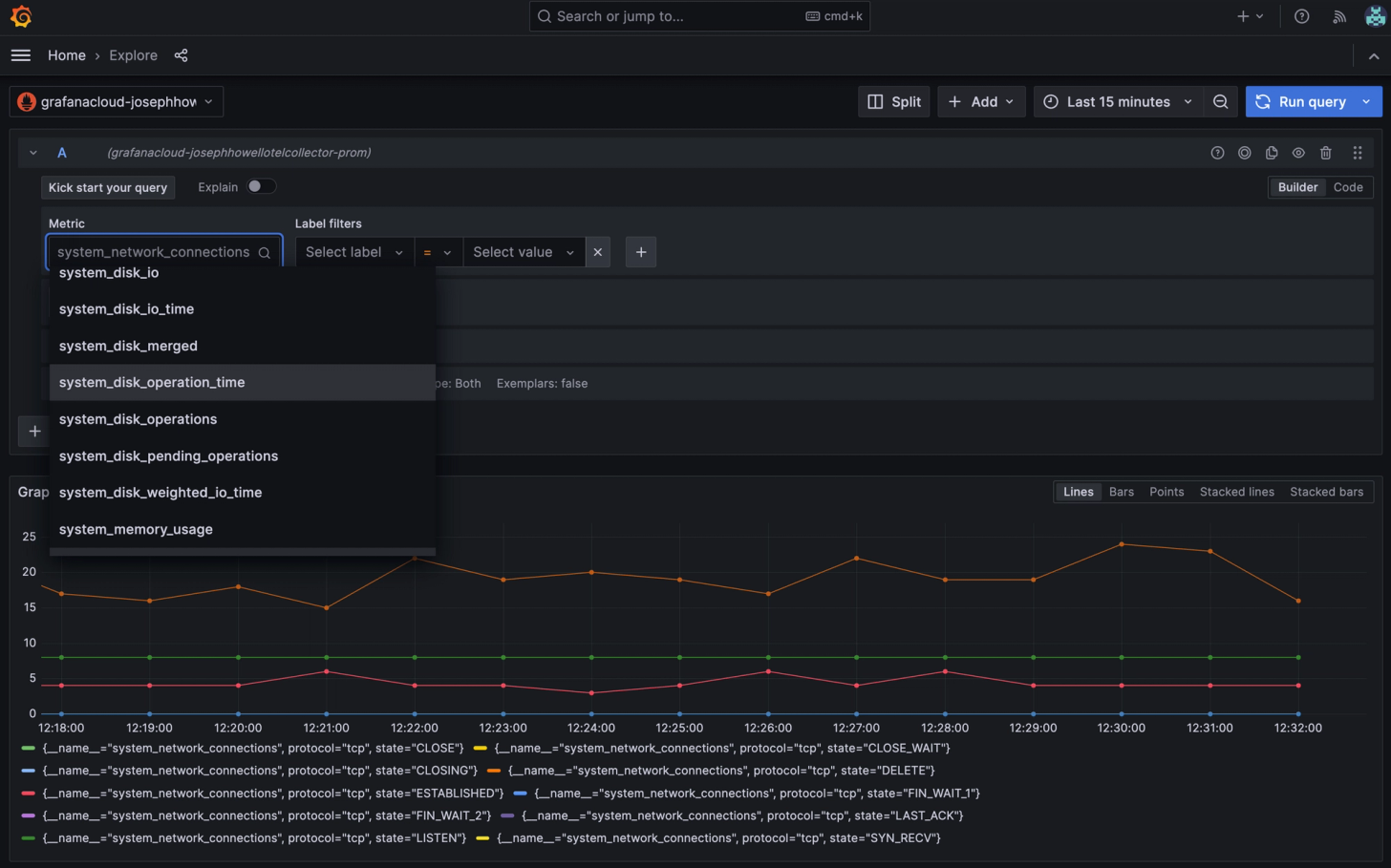

Finding your Metrics

To view your metrics, choose the Prometheus data source linked to your OTLP access policy. The metric names correspond to the scraper groups we specified in the configuration (cpu, disk, memory, and network).

Finding your Logs

To view your logs, select the Loki data source associated with your OTLP access policy, then set ‘exporter = OTLP’ as the label filter.

And that’s it! You’ve successfully installed, configured, and shipped observability data to a back-end using the OpenTelemetry collector. From here, you can continue to customize your configuration, build dashboards, and create alerts. I'll dive deep into those topics in a future post.

If you have any questions or feedback or would like to chat about OpenTelemetry and observability, feel free to contact us on the CNCF Slack.

Also, remember to subscribe to our newsletter for more tips, updates, and insights on observability and telemetry.